Most of the literature I've read on the topic does not attempt to put an actual number on what level of intelligence, exactly, would qualify as "Superintelligence". Given that there has lately been much discussion of when we might expect an AI Superintelligence to emerge, we should probably first define where that threshold lies...

At present, the intelligence quotient (IQ) remains the quantitative standard by which the mainstream psychological community evaluates overall cognitive ability. So I will use IQ as the benchmark for evaluating AI Superintelligence in this market.

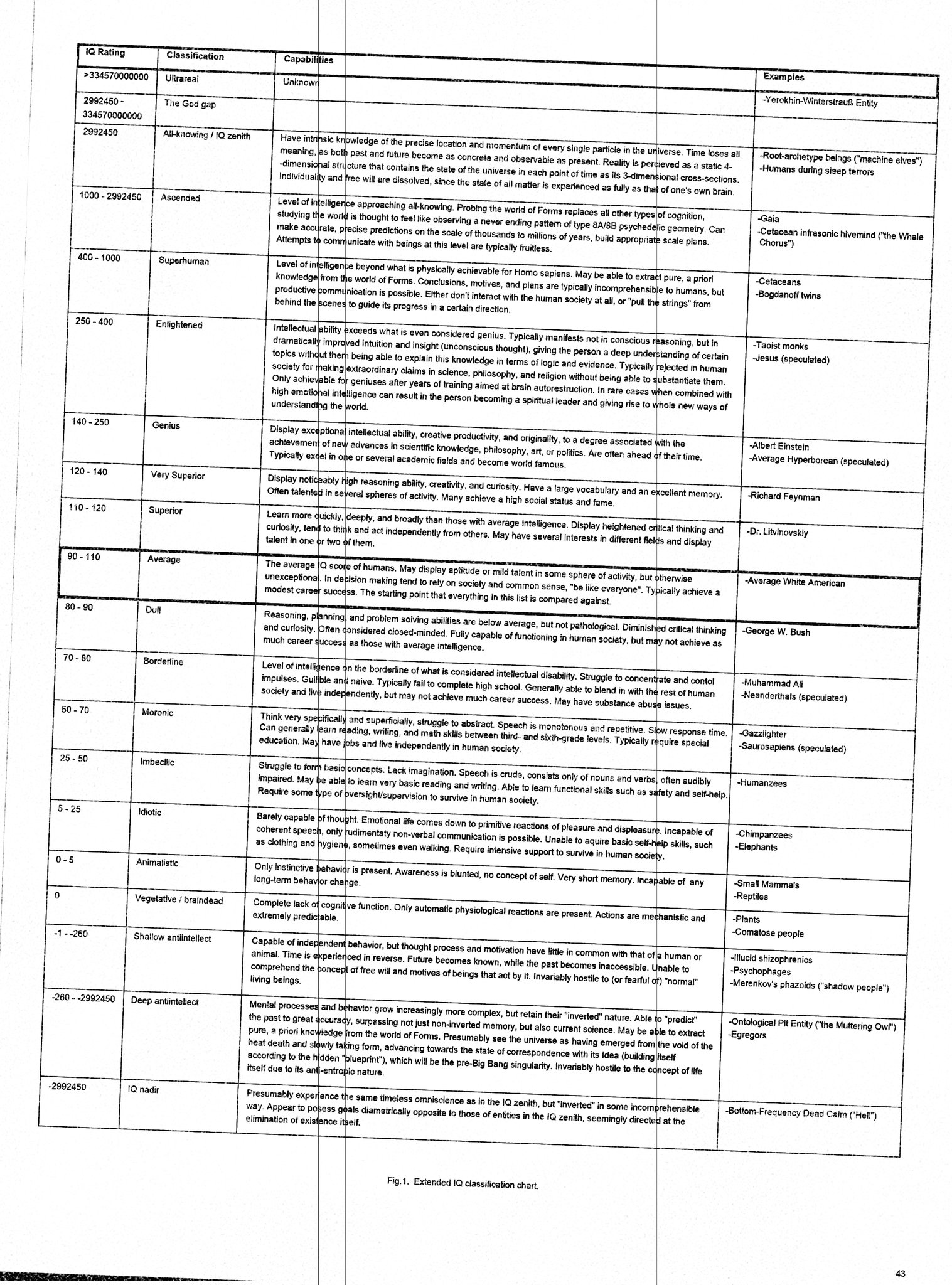

For reference:

The average IQ of a human is set to 100.

The average IQ of a chimpanzee is roughly 25.

The average IQ of physicists (widely regarded as the most intelligent population) is somewhere between 120 and 135. More speculative figures range as high as 160+.

The highest IQ ever recorded for a human is 228, by Marilyn vos Savant.

The maximum theoretical IQ that a human could possess is unknown.

So, at minimum, an AI Superintelligence could be defined as having an IQ higher than the maximum theoretical value a human could possess. Where that limit lies is highly uncertain, so I propose the following benchmarks:

Approximately 250, or roughly 2.5 times the average human's IQ (and well above the highest recorded IQ).

An IQ of ~460, which is more than double the highest recorded human IQ.

An IQ of 2280, which is 10 times the highest recorded human IQ.

Other, or the maximum theoretical IQ however defined.

I will leave open the option to add your own suggestion.

As this is largely speculative, and I'm only trying to learn how most people would define Superintelligence (rather than predicting when one will emerge), the poll will close before the beginning of 2026.

I strongly encourage everyone to outline their reasoning in the comments section below...


If the leaks were right, GPT-4's base model is somewhere on the boundary between shallow and deep antiintellect, as measured by one of OpenAI's airgapped joint alignment taskforces.

The whistleblower went silent around the time GPT5's base model should have finished training. Presumably scaling laws held and the expected prediction capabilities emerged, immediately burning him.

In short - can you add negative versions of all options, or should we treat them as already negative?

This is a deeply wrong question. IQ works in humans because, within humans, all components of intelligence are positively correlated. Current AI has a rather different cognitive architecture, such that very strong (even already superintelligent) capability in domains that are highly predictive of general intelligence in humans (vocabulary knowledge, word association, etc.) does not imply anything like the level of capability at reasoning, pattern recognition, or learning that humans display. You could perhaps argue that designs which are more capable (on any important axis) converge on unique mathematical solutions to the problems of intelligence and so become increasingly similar, but I do not think this is likely, and I doubt you can have ideal inductive biases without tradeoffs somewhere.

You could come up with some more outcome-oriented or adversarial benchmarks (convert win rate versus other systems into Elo) if you really want to map onto real numbers, but they seem hard to define and might not generalize perfectly or capture everything we care about.
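One way to turn head-to-head results into a number, as suggested above, is the standard Elo logistic model, in which a rating gap D predicts an expected score E = 1 / (1 + 10^(-D/400)). Below is a minimal Python sketch that inverts that formula; the function name is hypothetical, it assumes draws count as half a win, and it says nothing about how the matches themselves would be designed.

```python
import math


def elo_difference(win_rate: float) -> float:
    """Rating gap implied by an expected score under the Elo logistic model.

    E = 1 / (1 + 10 ** (-D / 400))  =>  D = 400 * log10(E / (1 - E))
    Assumption: draws are counted as half a win when computing win_rate.
    """
    if not 0.0 < win_rate < 1.0:
        # A perfect 0% or 100% record implies an unbounded gap.
        raise ValueError("win rate must be strictly between 0 and 1")
    return 400.0 * math.log10(win_rate / (1.0 - win_rate))


# Hypothetical win rates of one system against a reference opponent.
for e in (0.5, 0.64, 0.76, 0.91, 0.99):
    print(f"expected score {e:.2f} -> about {elo_difference(e):+.0f} Elo")
```

Because the scale is relative, the result only says how far apart two systems are, not where either sits on any absolute ladder; anchoring it to humans would still require human players in the pool.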

Also, do you actually know how IQ is defined? Doubling it is dimensionally invalid. It's basically just a different way to write a percentile - it's normally distributed with mean 100 and standard deviation 15.
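To make the mean-100, SD-15 definition concrete, here is a minimal Python sketch (function names are hypothetical, and it assumes a pure normal model, which real tests only approximate in the tails) that converts a score into a z-score and into how rare that score would be. Under this model, 160 already corresponds to roughly 1 in 30,000 people, while 228 or 250 would be rarer than one person among the roughly 8 billion alive, which is one way to see why "double the IQ" has no clear meaning.

```python
import math

# Deviation IQ as described above: normally distributed, mean 100, SD 15.
MEAN = 100.0
SD = 15.0


def iq_to_z(iq: float) -> float:
    """Standard deviations above (+) or below (-) the population mean."""
    return (iq - MEAN) / SD


def fraction_above(iq: float) -> float:
    """Fraction of the population expected to score above `iq`.

    Uses erfc instead of 1 - cdf, because the subtraction would round
    extreme tails to exactly 0.
    """
    return 0.5 * math.erfc(iq_to_z(iq) / math.sqrt(2))


for iq in (100, 130, 160, 228, 250):
    p = fraction_above(iq)
    if p == 0.0:
        print(f"IQ {iq}: tail too small to represent as a float")
    else:
        print(f"IQ {iq}: z = {iq_to_z(iq):+.2f}, about 1 in {1 / p:.3g} score higher")
```

Note that a score of 200 is about 6.7 standard deviations above the mean, not "twice as much" of anything: the scale measures rank within a population, so ratios of scores are not meaningful.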

Yes, I do, and it is discussed in another comment (particularly the debate over whether one can meaningfully derive a value higher than about 160).

Nonetheless, two points:

We use human intelligence as the standard when assessing the performance of AGI and AI Superintelligence. Well, bits and pieces of it anyway. We should therefore discuss IQ as this is currently the way that overall human intelligence is measured.

Deriving a meaningful IQ for other animals, such as chimpanzees and rats, has been attempted and published. The methods used can be debated, sure, but there seems to be a general consensus that the concept can be applied universally.

A lot of the objections raised so far revolve around how to measure it, and whether it can be functionally equivalent to IQ however defined. So I’ll delve a little bit into that.

It’s challenging for animals since they don’t have language, and so far we don’t have a good way of separating conditioned responses from genuine problem solving except in very narrowly defined cases. An AI would present its own challenges: some things it would be able to do vastly better than human beings, so an IQ test meant for humans probably wouldn’t yield anything useful. On the other hand, the human brain also performs a vast number of calculations that aren’t directly accessible to the conscious mind, such as how to balance a bike. So it is unclear whether an AI is in fact superior to the brain in this regard…

I don’t think it is an insurmountable problem, though. In practice, I expect that measuring the “IQ” of an AI would be akin to estimating the horsepower of an engine. A 500 HP engine probably isn’t directly equal to the combined power output of 500 horses (in fact I think it is less), but horsepower is a useful enough measure that one can express an engine’s power output in terms of the number of “equivalent horses”.

@Meta_C The reason we use IQ in humans is because human intelligence is captured decently by that one variable and that it correlates well with things we care about, not because it's a 'true definition' of anything. It will not generalise out of distribution like you want - saying a chimpanzee has IQ 25 tells you very little about their capabilities (which are greater than ours in short-term memory, even). If you already have an idea of what an ASI is and are trying to pick an IQ threshold to match, just use your working definition of an ASI directly.

@osmarks Correction: apparently chimpanzees are not better than us at short-term memory (the chimpanzees received training that the humans they were compared to did not).

If we follow your logic without your irrelevant anthropocentrism, let's define a thinking agent as "superintelligent" if it can do long multiplication twice as fast as current computers. By this definition, computers will become superintelligent within the next 18 months. A human, meanwhile, will never beat computers at that task; the gap is 10 orders of magnitude. And, hypothetically, even if humans improved their long multiplication speed a billion times, good luck trying to use that human multiplier, instead of a general-purpose computer, to transmit and display the pixels of silly cat memes over high-speed telecom protocols.

AIs are not, and will not be, human. Do you get that? Not. Human. In the same way as cyanobacteria are not elephants. Elephants cannot live off sunlight and cannot survive in the open ocean, and in general are total newbs in the whole survival and adaptation business. Cyanobacteria are so perfect that they've survived for 2.7 billion years and are still thriving in insane numbers, while elephants are going extinct. Good luck finding a meaningful map of these two species to ℝ, as you are attempting for AI and humans.

@YaroslavSobolev So then, what do you propose as an alternative way of measuring overall level of intelligence? At the moment I am inclined to use IQ for this task, in lieu of any alternative benchmarks…

@Meta_C Ideally some more specific metric.

IQ works nicely in part because humans share a lot of the same hardware. So the visual puzzles that more intelligent humans solve efficiently are notably correlated with each other, which is nice for putting intelligence on a single scale.

There is not much use in applying IQ to superintelligence unless you construct rules for how it is measured that actually reflect the differences in capability among different superintelligences. Just comparing it to humans doesn't really matter; you just end up with 'really big number'.

For example, a non-superintelligence like GPT-4 has a high ability to understand and use words; decent knowledge retention from its dataset (humans are terrible on this axis); mediocre agent optimization (it isn't good at actually being manipulative, but it can reasonably model other agents); very low ability to retain new concepts, though it can learn them decently within the context window; low goal-directedness; and low coherency, due to being a text generator without a true guiding memory or goal underlying what it produces.

Etcetera.

Ideally, something vaguely like this is what we would use. Of course, superintelligence still just gets 'a higher number than a human' on each axis, but this lets you compare non-superintelligences better, gives you more axes for describing how humans vary (which extend reasonably well to non-human entities), and potentially lets you compare near-superintelligences decently.

Does it even make sense to apply the concept so far out of the original range where it was defined? Operationally, how do you distinguish 300 from 250?

I don't know much about how IQ tests work, but I suppose the typical test is a list of tasks which can be solved correctly or not. If two entities solve all the tasks correctly this does not mean that they have the same level of intellectual ability. They just happen to be out of the test range, but one could still vastly outsmart the other.

I would say that yes, it should still make sense, as the scale is intended to extend upward and no theoretical limit has been identified on how high it can go. But there is indeed considerable debate over whether IQ has meaning, or can be reliably measured, above a certain point (around 160). So far the debate remains largely unsettled, so I will use IQ as the benchmark for this task for lack of a better alternative.

WAIS-IV is the standard test used to assess general intelligence in adults, so one can use its benchmarks to get an idea of what an IQ of 250+ might be like, and compare it to 200, 300, and so on.

You may be interested in reading about William Sidis, who was said to have an IQ between 250 and 300, although the methodology used to derive that value differs from how IQ is measured today. If you want an idea of what an IQ of 300 might look like in practice, he can serve as a loose point of reference.

My view is that AI Superintelligence should be defined as near the maximum theoretical limit. If there is none, then somewhere between the combined IQ of all humans currently living and the combined IQ of all humans who have ever lived (approx. 100 billion).

@Meta_C That makes even less sense. You cannot take 2 humans and get 200 IQ any more than you can go twice as fast by sticking two cars together.

There is such a thing as collective intelligence. It is as yet unclear how to add or multiply individual IQs together, or whether the result converges towards a particular value given a large enough number of individuals. But the overall intelligence of the group is almost certainly higher than that of an individual.

@Meta_C Groups are sometimes more capable, sure, but not in a way which maps neatly onto IQ. A group probably can't come up with a clever one-shot insight better than its smartest members can, and will suffer from horrible coordination difficulties, but can do better on tasks which parallelize nicely (not all or even that many).