Elon Musk has been very explicit in promising a robotaxi launch in Austin in June with unsupervised full self-driving (FSD). We'll give him some leeway on the timing and say this counts as a YES if it happens by the end of August.

As of April 2025, Tesla seems to be testing this with employees, using supervised FSD, while doubling down on the public Austin launch.

PS: A big monkey wrench no one anticipated when we created this market is how to treat the passenger-seat safety monitors. See FAQ9 for how we're trying to handle that in a principled way. Tesla is very polarizing and I know it's "obvious" to one side that safety monitors = "supervised" and that it's equally obvious to the other side that the driver's seat being empty is what matters. I can't emphasize enough how not obvious any of this is. At least so far, speaking now in August 2025.

FAQ

1. Does it have to be a public launch?

Yes, but we won't quibble about waitlists. As long as even 10 non-handpicked members of the public have used the service by the end of August, that's a YES. Also if there's a waitlist, anyone has to be able to get on it and there has to be intent to scale up. In other words, Tesla robotaxis have to be actually becoming a thing, with summer 2025 as when it started.

If it's invite-only and Tesla is hand-picking people, that's not a public launch. If it's viral-style invites with exponential growth from the start, that's likely to be within the spirit of a public launch.

A potential litmus test is whether serious journalists and Tesla haters end up able to try the service.

UPDATE: We're deeming this to be satisfied.

2. What if there's a human backup driver in the driver's seat?

This importantly does not count. That's supervised FSD.

3. But what if the backup driver never actually intervenes?

Compare to Waymo, which goes millions of miles between [injury-causing] incidents. If there's a backup driver we're going to presume that it's because interventions are still needed, even if rarely.

4. What if it's only available for certain fixed routes?

That would resolve NO. It has to be available on unrestricted public roads [restrictions like no highways is ok] and you have to be able to choose an arbitrary destination. I.e., it has to count as a taxi service.

5. What if it's only available in a certain neighborhood?

This we'll allow. It just has to be a big enough neighborhood that it makes sense to use a taxi. Basically anything that isn't a drastic restriction of the environment.

6. What if they drop the robotaxi part but roll out unsupervised FSD to Tesla owners?

This is unlikely but if this were level 4+ autonomy where you could send your car by itself to pick up a friend, we'd call that a YES per the spirit of the question.

7. What about level 3 autonomy?

Level 3 means you don't have to actively supervise the driving (like you can read a book in the driver's seat) as long as you're available to immediately take over when the car beeps at you. This would be tantalizingly close and a very big deal but is ultimately a NO. My reason to be picky about this is that a big part of the spirit of the question is whether Tesla will catch up to Waymo, technologically if not in scale at first.

8. What about tele-operation?

The short answer is that that's not level 4 autonomy so that would resolve NO for this market. This is a common misconception about Waymo's phone-a-human feature. It's not remotely (ha) like a human with a VR headset steering and braking. If that ever happened it would count as a disengagement and have to be reported. See Waymo's blog post with examples and screencaps of the cars needing remote assistance.

To get technical about the boundary between a remote human giving guidance to the car vs remotely operating it, grep "remote assistance" in Waymo's advice letter filed with the California Public Utilities Commission last month. Excerpt:

The Waymo AV [autonomous vehicle] sometimes reaches out to Waymo Remote Assistance for additional information to contextualize its environment. The Waymo Remote Assistance team supports the Waymo AV with information and suggestions [...] Assistance is designed to be provided quickly - in a mater of seconds - to help get the Waymo AV on its way with minimal delay. For a majority of requests that the Waymo AV makes during everyday driving, the Waymo AV is able to proceed driving autonomously on its own. In very limited circumstances such as to facilitate movement of the AV out of a freeway lane onto an adjacent shoulder, if possible, our Event Response agents are able to remotely move the Waymo AV under strict parameters, including at a very low speed over a very short distance.

Tentatively, Tesla needs to meet the bar for autonomy that Waymo has set. But if there are edge cases where Tesla is close enough in spirit, we can debate that in the comments.

9. What about human safety monitors in the passenger seat?

Oh geez, it's like Elon Musk is trolling us to maximize the ambiguity of these market resolutions. Tentatively (we'll keep discussing in the comments) my verdict on this question depends on whether the human safety monitor has to be eyes-on-the-road the whole time with their finger on a kill switch or emergency brake. If so, I believe that's still level 2 autonomy. Or sub-4 in any case.

See also FAQ3 for why this matters even if a kill switch is never actually used. We need there not only to be no actual disengagements but no counterfactual disengagements. Like imagine that these robotaxis would totally mow down a kid who ran into the road. That would mean a safety monitor with an emergency brake is necessary, even if no kids happen to jump in front of any robotaxis before this market closes. Waymo, per the definition of level 4 autonomy, does not have that kind of supervised self-driving.

10. Will we ultimately trust Tesla if it reports it's genuinely level 4?

I want to avoid this since I don't think Tesla has exactly earned our trust on this. I believe the truth will come out if we wait long enough, so that's what I'll be inclined to do. If the truth seems impossible for us to ascertain, we can consider resolve-to-PROB.

11. Will we trust government certification that it's level 4?

Yes, I think this is the right standard. Elon Musk said on 2025-07-09 that Tesla was waiting on regulatory approval for robotaxis in California and expected to launch in the Bay Area "in a month or two". I'm not sure what such approval implies about autonomy level but I expect it to be evidence in favor. (And if it starts to look like Musk was bullshitting, that would be evidence against.)

12. What if it's still ambiguous on August 31?

Then we'll extend the market close. The deadline for Tesla to meet the criteria for a launch is August 31 regardless. We just may need more time to determine, in retrospect, whether it counted by then. I suspect that with enough hindsight the ambiguity will resolve. Note in particular FAQ1, which says that Tesla robotaxis have to be becoming a thing (what "a thing" is is TBD but something about ubiquity and availability) with summer 2025 as when it started. Basically, we may need to look back on summer 2025 and decide whether that was a controlled demo, done before they actually had level 4 autonomy, or whether they had it and were just scaling up slowly and cautiously at first.

13. If safety monitors are still present, say, a year later, is there any way for this to resolve YES?

No, that's well past the point where we'd presume that Tesla had not achieved level 4 autonomy in summer 2025.

14. What if they ditch the safety monitors after August 31st but tele-operation is still a question mark?

We'll also need transparency about tele-operation and disengagements. If that doesn't happen by June 22, 2026 (a year after the robotaxi launch) then that too is a presumed NO.

Ask more clarifying questions! I'll be super transparent about my thinking and will make sure the resolution is fair if I have a conflict of interest due to my position in this market.

[Ignore any auto-generated clarifications below this line. I'll add to the FAQ as needed.]

Update 2025-11-01 (PST) (AI summary of creator comment): The creator is [tentatively] proposing a new necessary condition for YES resolution: the graph of driver-out miles (miles without a safety driver in the driver's seat) should go roughly exponential in the year following the initial launch. If the graph is flat or going down (as it may have done in October 2025), that would be a sufficient condition for NO resolution.

Update 2025-12-10 (PST) (AI summary of creator comment): The creator has indicated that Elon Musk's November 6th, 2025 statement ("Now that we believe we have full self-driving / autonomy solved, or within a few months of having unsupervised autonomy solved... We're on the cusp of that") appears to be an admission that the cars weren't level 4 in August 2025. The creator is open to counterarguments but views this as evidence against YES resolution.

Update 2025-12-10 (PST) (AI summary of creator comment): The creator clarified that presence of safety monitors alone is not dispositive for determining if the service meets level 4 autonomy. What matters is whether the safety monitor is necessary for safety (e.g., having their finger on a kill switch).

Additionally, if Tesla doesn't remove safety monitors until deploying a markedly bigger AI model, that would be evidence the previous AI model was not level 4 autonomous.

Update 2026-01-31 (PST) (AI summary of creator comment): The creator clarified that passenger-seat emergency stop buttons should be evaluated based on their function:

If the button is a real-time "hit the brakes we're gonna crash!" intervention button, this would indicate supervision that could rule out level 4 autonomy

If the button is a "stop requested as soon as safely possible" button (where the car remains in control until safely stopped), this would not rule out level 4 autonomy

This distinction applies to both Waymo (the benchmark) and Tesla. The creator emphasized that mere presence of a safety monitor doesn't rule out level 4 - what matters is whether there is supervision with the ability to intervene in real time.

Update 2026-02-01 (PST) (AI summary of creator comment): The creator has proposed a concrete scenario for June 22, 2026 (the one-year deadline from FAQ14) that would result in NO resolution:

(a) Longer zero-intervention streaks but not to the point that unsupervised FSD is safer than humans

(b) More unsupervised robotaxi rides but not at a scale where tele-operation becomes implausible

(c) Continued lack of transparency on disengagements

(d) Creative new milestones that seem like watersheds but turn out to be closer to controlled demos

Conversely, if Tesla demonstrates a clear step change in autonomy before June 22, 2026 (such as declaring victory, opening up about disengagements, and shooting past Waymo), there would still be a debate about whether Tesla was at level 4 on August 31, 2025, but it would be more reasonable to give Tesla the benefit of the doubt on questions about tele-operation and kill switches.

Update 2026-02-02 (PST) (AI summary of creator comment): The creator has clarified terminology and concepts around supervision and disengagement:

Supervision refers to a human in the loop in real time, watching the road and able to intervene.

Real-time disengagement is when a human supervisor intervenes to control the car in some way - a gap in the car's autonomy. If the car stops on its own and asks for help or needs rescuing, those might count as other kinds of disengagement but not a real-time disengagement.

Evidence threshold: Human drivers have fatalities roughly once per 100 million miles, or non-fatal crashes every half million miles. A supervised self-driving car needs to go hundreds of thousands of miles between real-time disengagements before we have much evidence it's human-level safe.

With less than 100k robotaxi miles, seeing zero real-time disengagements would still be fairly weak evidence that the robotaxis would crash less than humans when unsupervised.
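To see why 100k miles is too few, here's a minimal sketch under a simple Poisson model. It assumes each real-time disengagement stands in for a would-be crash (a simplifying assumption) and uses the rough human benchmarks above. It computes how many consecutive disengagement-free miles you'd need before "as bad as a human driver" becomes statistically implausible. At 95% confidence this is the familiar rule of three: about 3 times the benchmark distance between events.

```python
import math

def miles_needed(miles_per_event, confidence=0.95):
    """Event-free miles needed so that, if the true rate were one event per
    `miles_per_event` miles, observing zero events would have probability
    at most 1 - confidence. Poisson model: P(0 events) = exp(-miles / miles_per_event)."""
    return -math.log(1 - confidence) * miles_per_event

# Rough human benchmarks quoted above
nonfatal = miles_needed(500_000)       # about 1.5 million miles
fatal = miles_needed(100_000_000)      # about 300 million miles
print(f"{nonfatal:,.0f} miles vs non-fatal crash rate")
print(f"{fatal:,.0f} miles vs fatality rate")
```

By this yardstick, 100k clean miles is only about a fifteenth of what's needed to rule out a worse-than-human non-fatal crash rate.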

For miles with an empty driver's seat, we need to know:

If safety monitors had the ability to intervene with a passenger-side kill switch

If that kill switch was real-time (like an emergency brake) or just a request for the car to autonomously come to a stop as quickly as possible

If the robotaxis have been remotely supervised (using the definition of supervision above)

Update 2026-02-02 (PST) (AI summary of creator comment): The creator has analyzed data suggesting Tesla robotaxis may have markedly worse safety than human drivers, even with supervision. If this analysis is fair, the creator indicates that Tesla's safety record could be too far below human-level to count as level 4 autonomy, regardless of questions about kill switches or remote supervision.

The creator notes that human-level safety has been assumed as a lower bound for level 4 autonomy throughout this market. A safety record significantly worse than human drivers would not meet the level 4 standard, even if other technical criteria were satisfied.

The creator acknowledges a possible Tesla-optimist interpretation: that Musk "jumped the gun" in summer 2025 but may have achieved unsupervised FSD later (possibly January 2026). However, this would still result in NO resolution for this market, since the criteria must be met by August 31, 2025.


My previous comment suggested that Tesla robotaxis have too few miles accumulated for us to know they're safe, even with a perfect safety record and zero real-time disengagements. Well, good news and bad news...

In James's market on whether Tesla will surpass Waymo in 2026, @TimothyJohnson5c16 posted some analysis from Electrek claiming that Tesla robotaxis do have enough miles to make meaningful claims about their safety.

Unfortunately, their claim is that the robotaxis, even with whatever amount of supervision they have, are markedly worse than human drivers.

I thought Electrek might be too biased a source, but, well, good news and bad news there too, if we believe Wikipedia's article on Electrek. Electrek are historically huge Tesla fanboys. It's safe to say that that's changed in recent years though. The article mentions the owner divesting from Tesla in 2020. Anyway, presumably we can't assume they're just making all this up. I'm anxious to hear counterarguments. Of course Tesla continues to make this hard by being tight-lipped about all crashes and incidents.

Waymo of course is the opposite of all of this. With their 100+ million miles and pretty complete transparency about every incident, we know Waymos are way safer than human drivers. And because Waymos are unsupervised we know they're at zero real-time disengagements over all those miles. What Waymo has is car-initiated (i.e., AI-initiated) remote assistance, meaning if the car is confused, it autonomously stops and calls home and a human tells it what to do.

I don't know if Tesla robotaxis ever get confused like that. If they never do, I would imagine three possible explanations:

Tesla's FSD is more advanced than the Waymo Driver, as they call it

The robotaxis are doing too few miles for those edge cases to come up

The robotaxis have dedicated remote supervisors preempting car confusion

What I had not imagined until now was the explanation that Tesla robotaxis just crash a lot more than even human drivers, let alone Waymos.

I've just been assuming this whole time that human-level safety is a lower bound -- that no robotaxi operator would tolerate safety that bad. At human-level, I'd think it only a matter of time before a PR disaster big enough to destroy the whole program. Like the tragic incidents that killed Uber's and Cruise's self-driving programs. Even much less tragic incidents would be disastrous, I'd have thought.

So if this Electrek analysis is at all fair, it's quite the bombshell. We could arguably give Tesla the benefit of the doubt on both kill switches and remote supervision and still say that their safety record is too far below human-level to count as level 4 autonomy. (After all, even a 1973 Dodge Dart can be fully self-driving, unsupervised, if we don't quibble about how quickly it crashes.)

Maybe the Tesla-optimist take here is that Musk jumped the gun last summer but unsupervised FSD really is close, or was finally achieved in December or January, and if you look at the numbers starting then, they'll tell a different story. Which would still leave us at a NO for this market but leaves plenty of room for exciting trading in James's market and others. (So far I'm personally at less than 33% over there, on Tesla overtaking Waymo in 2026, and am betting accordingly.)

I went to the primary source on this and learned things:

Tesla reported 9 robotaxi incidents in the period for which NHTSA provides data (2025 Jun 16 to Dec 15)

For 4 of those, the robotaxi was going 2mph or less

Only 1 involved injuries (minor)

Here are what I believe to be the most complete characterizations of the incidents we can get from the NHTSA data:

July, daytime, an SUV's front right side contacted the robotaxi's rear right side with the robotaxi going 2mph while both cars were making a right turn in an intersection; property damage, no injuries

July, daytime, robotaxi hit a fixed object with its front right side on a normal street at 8mph; had to be towed and passenger had minor injuries, no hospitalization

July, nighttime, in a construction zone, an SUV going straight had its front right side contact the stationary robotaxi's rear right side; property damage, no injuries

September, nighttime, a robotaxi making a left turn in a parking lot at 6mph hit a fixed object with the front right side of the car, no injuries

September, nighttime, a passenger car backing up in an intersection had its rear right side contact the right side of a robotaxi, with the robotaxi going straight at 6mph; no injuries

September, nighttime, a cyclist traveling alongside the roadway contacted the right side of a stopped robotaxi; property damage, no injuries

September, daytime, a stopped robotaxi traveling 27mph [sic!] hit an animal with the robotaxi's front left side, no injuries [presumably "stopped" is a data entry error]

October, nighttime, the front right side of an unknown entity contacted the robotaxi's right side with the robotaxi traveling 18mph under unusual roadway conditions; no injuries

November, nighttime, front right of an unknown entity contacted the rear left and rear right of a stopped robotaxi; no injuries

All the other details are redacted. I guess Tesla feel like they have a lot to hide? The law allows them to redact details by calling them "confidential business information" and they're the only company doing that, out of roughly 10 of them. Typically the details are things like this from Avride:

Our car was stopped at the intersection of [XXX] and [XXX], behind a red Ford Fusion. The Fusion suddenly reversed, struck our front bumper, and then left the scene in a hit-and-run.

I.e., explaining why it totally wasn't their fault, with only things that could conceivably be confidential, like the exact location, redacted. So I don't think Tesla deserves the benefit of the doubt here but if I try to give it anyway, here are my guesses on severity and fault:

Minor fender bender, 30% Tesla's fault (2mph)

Egregious fender bender, 100% Tesla's fault (8mph)

Fender bender, 0% Tesla's fault (0mph)

Minor fender bender, 100% Tesla's fault (6mph)

Minor fender bender, 20% Tesla's fault (6mph)

Fender bender, 10% Tesla's fault (0mph)

Sad or dead animal, 30% Tesla's fault (27mph)

Fender bender, 50% Tesla's fault (18mph)

Fender bender, 5% Tesla's fault (0mph)

Those guesses, especially the fault percents, are pulled out of my butt. Except the collisions with stationary objects, which are necessarily 100% Tesla's fault. But if we run with those guesses, that's 3.45 at-fault accidents. Over how many miles? More guessing required! I believe that for a while, all Tesla robotaxi rides had an empty driver's seat. But starting in September, Tesla added back driver's-seat safety drivers for rides involving highways. Or more than just those? We have no idea. We do know of cases of Tesla putting the safety driver back when the weather was questionable. In any case, only accidents without a safety driver in the driver's seat are included in this dataset, so we do need to subtract those miles when estimating Tesla's incident rate.
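The tallies above (9 incidents, 4 at 2mph or less, 1 with injuries, 3.45 guessed at-fault incidents) can be double-checked by transcribing the list into a small table. This is a sketch: the fault shares are the admittedly made-up guesses from the list, and since the number of driver-out miles is unknown, the rate function just takes it as an input rather than assuming a value.

```python
# (month, robotaxi speed in mph, guessed Tesla fault share, any injuries)
# Speeds as reported to NHTSA; the 27mph "stopped" entry is kept as reported.
incidents = [
    ("Jul", 2, 0.30, False),
    ("Jul", 8, 1.00, True),
    ("Jul", 0, 0.00, False),
    ("Sep", 6, 1.00, False),
    ("Sep", 6, 0.20, False),
    ("Sep", 0, 0.10, False),
    ("Sep", 27, 0.30, False),
    ("Oct", 18, 0.50, False),
    ("Nov", 0, 0.05, False),
]

total = len(incidents)
slow = sum(1 for _, mph, _, _ in incidents if mph <= 2)
injured = sum(1 for _, _, _, hurt in incidents if hurt)
at_fault = sum(share for _, _, share, _ in incidents)

def per_million_miles(count, driver_out_miles):
    """Incident rate per million miles. Only driver-out miles belong in the
    denominator, since incidents with a driver's-seat safety driver aren't in this dataset."""
    return count * 1_000_000 / driver_out_miles

print(total, slow, injured, round(at_fault, 2))
```

Passing `total` instead of `at_fault` to `per_million_miles` gives the raw, fault-agnostic incident rate, which is the apples-to-apples comparison suggested later in the thread.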

(I'm pausing here in case anyone has thoughts before I keep going and try to get to a bottom line on what we can infer about Tesla's level of safety from this data. I don't yet know how it will turn out. I can certainly imagine, if it ends up looking ok for Tesla after all, that some of us will be like, "yeah but those sneaky bastards probably aren't complying with the reporting requirements whenever they think they can get away with it". So plenty of assumptions will still be built in but I'm super curious what this data is going to tell us.)

@dreev

6 and 9 are stopped robotaxis; not sure why you aren't assigning 0% Tesla fault to these.

7 animal could be anywhere from 0% to 100% Tesla fault so I would probably just assign 50% rather than 30%.

Anyway my assessment seems to end up with near identical fault apportionment in total so no complaint/disagreement so far.

@ChristopherRandles My thinking is that, for example, maybe the Tesla veered in front of the cyclist and stopped. Being at 0mph at the moment of collision is good evidence of not being at fault, but not dispositive. But I agree we should treat these as mostly if not completely the other vehicle's fault. Good point about the animal too; I agree it could be anything from 0-100 so might as well call it 50%. I guess I was aiming for some kind of reasonable amount of benefit of the doubt. But there's probably no way to make that feel satisfactory so I'm now thinking that we need to just stay apples-to-apples by comparing incident rates for all these autonomous car companies without trying to estimate fault.

That should be fair because bad luck should average out and affect them all equally.

@dreev Electrek has been negative on Tesla and FSD specifically in the last few years. I've asked GPT5 to score the last 20 Electrek articles on Tesla: "Average “Tesla-fan score” across these 20 articles: ~22/100 → on this recent slice, Electrek reads decidedly non-fan / frequently adversarial toward Tesla/Musk"

The most recent 4 headlines (to get an idea)

- Feb 5, 2026: Tesla drops plans for robo-charging site for the SF robotaxis it doesn’t have

- Feb 4, 2026: Tesla’s new FSD push looks like a gross money grab: they have questions to answer

- Feb 4, 2026: Tesla UK sales plunge 57% in January as BYD races ahead

- Feb 4, 2026: Two Tesla board members are all over the Epstein files: what happens next?

@dreev Fun fact that came out about Waymo: their remote assistance is in the Philippines https://x.com/niccruzpatane/status/2019213765506670738

@MarkosGiannopoulos 👍 📈 💯 is my reaction to that news. If there were any doubts that they might be cheating with remote assistance, this just makes that all the more unlikely, right? You're not gonna have real-time tele-operation from literally the other side of the planet.

These headlines that try to cast it as "truth comes out: Waymos are tele-operated" are so infuriatingly disingenuous. (But not nearly as outrageous as all the "Waymo hits child" headlines this week. "Waymo saves child's life by nudging it to sidewalk" would be less misleading. Maybe I'll title today's AGI Friday that way, in protest.)

Anyway, it's ultimately irrelevant where the remote assistance humans are physically located, right?

@dreev The video made clear there were some in the US and some in the Philippines. Could that make you wonder why they split it? Is it that the Philippines staff deal with stopped vehicles, with the camera close to confusing objects and cars never driving at more than 5mph while needing that assistance, whereas the US staff deal with higher-speed driving? TBH, I think it's more likely that the (more expensive) US operators are mostly developing and writing up procedures to train the Philippines staff rather than assisting with higher-speed driving.

They claimed the cars were in charge of driving and remote assistance was just one of the inputs used. Doesn't sound like remote driving to me.

Yes, it's pretty much irrelevant where Waymo staff are. Finding out whether Tesla's remote assistance staff were outside the US might be more interesting, but I expect they are in the US, so we won't find that out. If they weren't, my immediate suspicion would be that it's customer call centre assistance for passenger enquiries rather than remote driving.

Anyway, level 4 permits some circumstances to be outside the software's ability to handle safely, so assistance in some unclear circumstances is ok.

@ChristopherRandles One reason might be that the Philippines team does the night shift (16 hours difference from California). But given the reluctance of the Waymo executive to say whether the majority of the staff is in the US or not, it might just be that they are trying to save costs. Gemini calculated Waymo might have 600-1200 employees on remote assistance, given 2500 cars and a humans/cars ratio between 1:10 and 1:20.
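As a sanity check on that estimate: 2500 cars at 10 to 20 cars per human works out to only 125 to 250 people on duty at any one moment. The 600-1200 figure is 4.8 times that, which would be consistent with covering every seat around the clock (168 hours a week divided by a 40-hour work week is 4.2 people per seat, before leave and breaks). That shift multiplier is an assumption about how the number was derived, not something stated by Gemini or Waymo.

```python
def on_duty(cars, cars_per_human):
    """People needed at any one moment for a given cars-to-human ratio."""
    return cars / cars_per_human

cars = 2500
low, high = on_duty(cars, 20), on_duty(cars, 10)   # 125.0 and 250.0
implied_multiplier = 600 / low                      # same as 1200 / high

# Assumption: headcount per continuously staffed seat with 40-hour weeks
people_per_seat = 168 / 40                          # 4.2, before vacation/sick leave

print(low, high, implied_multiplier, people_per_seat)
```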

Here's a review of a common point of confusion about supervision and tele-operation, which I'm adding because I had an idea for better terminology.

Supervision (as in "Supervised Full Self-Driving") refers to a human in the loop in real time, watching the road and able to intervene. What if they're able to intervene but never do? In principle that's ok. Abundance of caution, belt and suspenders. We just need to get specific about "never". Human drivers have fatalities something like once per 100 million miles, or something like every half million miles for non-fatal crashes. So a supervised self-driving car needs to go hundreds of thousands of miles between real-time disengagements before we have much evidence it's human-level safe.

A real-time disengagement is when a human supervisor intervenes to control the car in some way -- a gap in the car's autonomy. If the car stops on its own and asks for help or needs rescuing, those might count as other kinds of disengagement but not a real-time disengagement.

With less than 100k robotaxi miles, it wouldn't be enough to see zero real-time disengagements. That would still be fairly weak Bayesian evidence that the robotaxis would crash less than humans when unsupervised. Tesla is actually getting close to a million cumulative robotaxi miles but most of those are with a safety driver in the driver's seat and they're not telling us the disengagement rate. So there's just no evidence either way based on those miles.
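A hedged illustration of "weak Bayesian evidence": compare two hypotheses under a Poisson model, human-level (one crash-worthy event per 500k miles) versus a hypothetical robotaxi five times worse than that (one per 100k miles; the factor of five is an arbitrary choice for illustration). Zero real-time disengagements in 100k miles only favors human-level by a likelihood ratio of about 2.2, while the same clean record over a million miles would favor it by roughly 3,000 to 1.

```python
import math

def bayes_factor_zero_events(miles, miles_per_event_a, miles_per_event_b):
    """Likelihood ratio P(0 events | A) / P(0 events | B) when events follow a
    Poisson process with one event per miles_per_event_* miles on average."""
    like_a = math.exp(-miles / miles_per_event_a)
    like_b = math.exp(-miles / miles_per_event_b)
    return like_a / like_b

HUMAN_LEVEL = 500_000      # miles per crash-worthy event, rough human benchmark
FIVE_X_WORSE = 100_000     # hypothetical alternative for illustration

for miles in (100_000, 1_000_000):
    bf = bayes_factor_zero_events(miles, HUMAN_LEVEL, FIVE_X_WORSE)
    print(f"{miles:>9,} clean miles -> Bayes factor {bf:,.1f}")
```

The ratio grows exponentially with clean miles, which is why the mileage count matters so much more than the bare fact of "zero disengagements so far".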

And for the miles with an empty driver's seat, this is why we need to know if the safety monitors have had the ability to intervene with a passenger-side kill switch and if that kill switch was real-time, like an emergency brake, or just a request for the car to autonomously come to a stop as quickly as possible. Likewise, we need to know if the robotaxis have been remotely supervised, using this definition of supervision.

More unsupervised rides happening today https://x.com/tesla2moon/status/2017637536332390439

https://x.com/reggieoverton/status/2017669854015225925

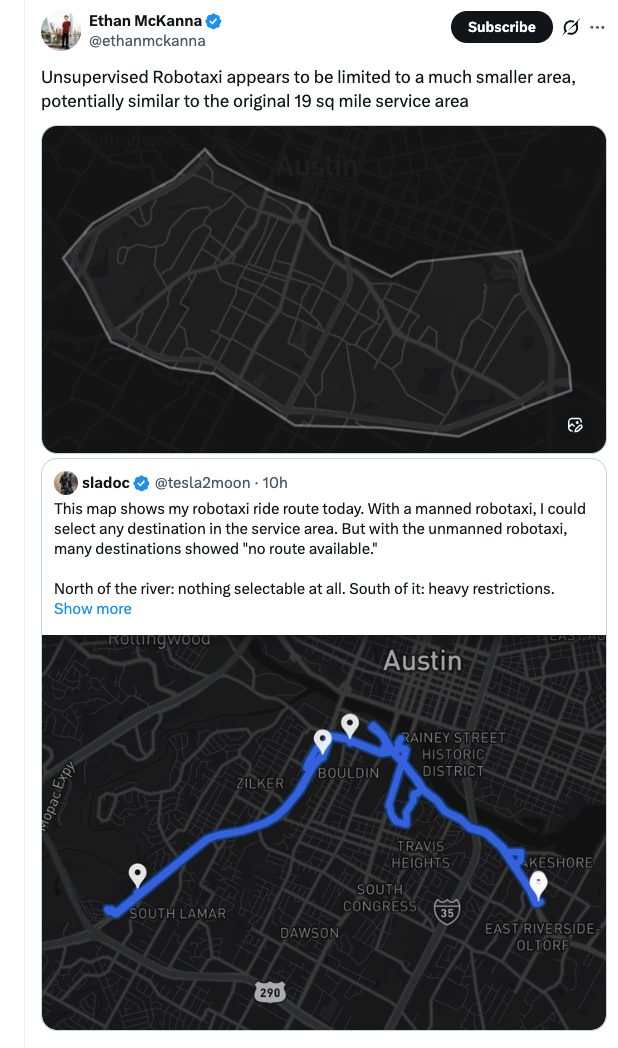

Seems like the unsupervised rides are happening inside a part of the current service area https://x.com/ethanmckanna/status/2017799555920634201

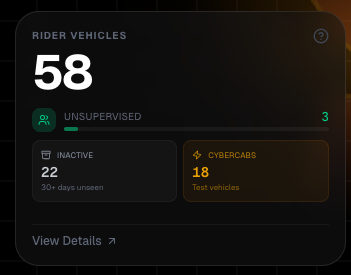

Update today! David Moss (the lidar salesman who achieved the first coast-to-coast trip with zero interventions) got a fully unsupervised robotaxi ride on attempt number 58. No chase car. He then booked a different ride with a different robotaxi, using a friend's account, and it was also fully unsupervised (modulo the open question of tele-operation).

So I'd say that's evidence that the removal of safety monitors is more than a one-off demo, as I was becoming suspicious of. If scaling starts happening and private Teslas start hitting disengagement rates closer to 1-in-100k miles then we may have to dive in on debating whether Tesla was at level 4 with the robotaxis on August 31. I see plenty of evidence against it, like what @ChristopherRandles has been saying about FSD versions, not to mention Elon Musk himself saying that unsupervised autonomy was still "a month away" in November. But we'll want to hear the Tesla bulls out.

Again, I think what we're waiting on now is scaling up to the point that tele-operation is implausible.

Finally, to review how Tesla could've pulled off the robotaxi service we've seen so far if they haven't been at level 4 all along, I believe it would be some combination of:

Tele-operation (not to be confused with remote assistance, allowed for level 4)

Safety monitors with physical kill switches

Low enough mileage to not hit edge cases

The market description says we presume NO, not level 4 by August 31, 2025, if we don't have transparency about tele-operation and disengagements "soon" after August 31. To pin that down, how do people feel about June 22, 2026 -- one year after the robotaxi launch?

@dreev Re: Tele-operation. What's the point of having a safety passenger if Tesla had people driving remotely?

@MarkosGiannopoulos Network lag? Or for plausible deniability, like if they got caught tele-operating they could say "yes, well, we never said this was fully unsupervised, did we? what? Musk said that? well he didn't mean fully literally unsupervised". Or just redundancy, I guess. Key word "guess" though. I'm just super 🤨 based on what we've seen so far. I'm not sure what we're seeing today from Tesla is at parity with where Waymo was in 2017. Not to say that means Tesla will need 9+ years to catch Waymo.

Anyway, I want to make sure I'm not moving goalposts. Always being like "but what if they're tele-operated?" is in danger of doing that. On the other hand, if Tesla doesn't scale this up and if private Tesla owners continue to not be able to safely read a book in the driver's seat then at some point we're forced to conclude that Tesla has not cracked level 4. Are you comfortable committing to June 22, 2026, as a line in the sand for this market? As in, if we have neither transparency about tele-operation/disengagements nor sufficient scale that such transparency is irrelevant then on June 22 we presume a NO for this market.

@dreev I don't mind the deadline, but how do you describe "we have neither transparency about tele-operation/disengagements nor sufficient scale that such transparency is irrelevant"?

I can safely say

a) Tesla will not disclose any tele-operation because it's completely against how they describe the service. It would be completely disastrous for them.

b) Tesla already posts some safety statistics for FSD, but they will only post Robotaxi-specific safety data when they look good. Their mode of operation is only to post negative-looking data when they are legally required.

c) As for private customers, it's illegal to read books while driving, so you won't see this happening at a scale that would convince anyone unsupervised FSD is real.

What I believe remains to settle this are things that can both happen and be verified, i.e., clear facts rather than us quibbling over a specific word in a Musk statement or tweet:

a) Tesla gets a licence from the Texas DMV sometime in spring, according to the new law for autonomous vehicles

b) Tesla has 10+ (20?30?) unsupervised cars (current count is 3) in Austin doing paid rides

c) Tesla starts the California DMV process to get an autonomous license

@MarkosGiannopoulos Good points! I think I disagree with (c) in particular, in an important sense. Namely, people like David Moss and Dirty Tesla are doing honest reporting on exactly how good FSD is getting. Plus the crowdsourced data on disengagements. I think we'll be able to have a decent sense of when FSD is approaching the threshold where it would be safe to read a book, were it legal. (And I kind of expect people to start doing it, legal or not, when they're convinced it's safe enough.)

@dreev I am not following this market anymore, so I did not read all the comments and maybe you're already aware, but I think it's worth pointing out that David Moss was caught lying about using a second account to get his second robotaxi ride. Note the screen saying "welcome David" on his second ride. (His friend is named James.)

>"Tesla has 10+ (20?30?) unsupervised cars (current count is 3) in Austin doing paid rides"

If 30 unsupervised cars required 30 teledrivers, then I think this bombshell news would break in some manner. There would be no competitive advantage over traditional taxis if that remained the case. I don't expect this to be the case, as Tesla wouldn't be pushing ahead so hard if they were this far away from level 4.

If instead 30 unsupervised cars required 3 teledrivers to handle the cases where the software is least sure what to do, with the plan being to reduce the ratio of teledrivers to cars over time, then this seems much harder to rule out and less likely to be revealed.

I don't think this is likely either, since they are unlikely to be doing it for customer FSD, and customer FSD is racking up considerable mileage without critical disengagements.

I am almost at the point of wanting to concede that the question should be resolved without much further evidence on teledriving, in order to bring attention back to whether Tesla was at level 4 in August 2025 and when this was launched. However, I should respect @dreev's wish to follow whatever course seems appropriate to judge the question.

@dreev "I think we'll be able to have a decent sense of when FSD is approaching the threshold where it would be safe to read a book, were it legal." - But you will still have people arguing that the Robotaxi in June '25 had an older/less capable version of FSD than what private customers will be running in 2026.

@MarkosGiannopoulos Yeah, that could get messy. I'm just anticipating possible worlds in which we won't have to open that can of worms. Like if things have only changed incrementally by June 2026 then I believe we're agreed it'll be fair to presume NO despite lacking proof that last summer's robotaxis were below level 4. I can imagine it being frustrating for YES holders if the step change in autonomy finally happens just a bit later in 2026. But that's why we're giving it a full year from the 2025 launch before presuming NO.

And for anyone just tuning in, to echo @MarkosGiannopoulos, if we do see such a step change in autonomy before June 22 then we still have to have the whole debate about whether Tesla was at level 4 on August 31, 2025. So the incremental-vs-step-change question is only about our deadline for presuming NO.

To stay as concrete as possible, imagine the following possible world on June 22, 2026, which is in fact the one I predict for Tesla's robotaxis (assuming Musk doesn't relent on lidar/radar, not that anyone expects him to):

(a) Longer zero-intervention streaks but not to the point that unsupervised FSD is safer than humans

(b) More unsupervised robotaxi rides but not at a scale where tele-operation becomes implausible

(c) Continued lack of transparency on disengagements

(d) And I guess creative new milestones that seem like watersheds at first but turn out to be closer to controlled demos -- like the autonomous delivery.

I propose that it's fair to call that "only incremental changes" and that we'd have an easy NO in such a possible world. At the other extreme, if Tesla declares victory tomorrow, opens up about disengagements etc to prove it's the real deal, and proceeds to shoot past Waymo, then -- well, even then there's a debate to be had about when they hit level 4. I agree with Markos that Tesla is highly unlikely to be transparent retroactively. But in that possible world it will suddenly feel much more reasonable to give Tesla the benefit of the doubt on all these questions about tele-operation and kill switches and such.

@WrongoPhD Oh no, I had a good feeling about that guy! I'm inclined to give him the benefit of the doubt until hearing his response to the allegation. Or maybe it's moot by now. Plenty of people are getting (physically) unsupervised rides in the meantime, I think. Still a tiny fraction, but not a one-off thing either, from what I can glean from people tweeting about it.

It now occurs to me, someone should try sitting in the passenger seat on one of these unsupervised rides and see what happens if you hit that infamous door-open button.

On the FSD version of Robotaxi

"A variant of the software that's used for the robotaxis service was shipped to customers with v14 and customers saw a huge jump in performance."- Ashok Elluswamy, Q4/2025 earnings call

This confirms Tesla has at least two branches of FSD (I think HW3 and Cybertruck customer cars run slightly different versions as well), and the timing of releasing customer version 14 is not very helpful for this market. Robotaxi was much earlier (June) running on a "v14-like" FSD version.

@MarkosGiannopoulos I suspect we are both to some extent seeing what we want to see to argue our case here.

To me, is there 'a variant of the software'? Well, yes: the robotaxi has to integrate with the system for requesting a robotaxi, with details of the journey being passed to the assigned taxi, and with call centre assistance. That makes it 'a variant' of the software in customer-owned FSD cars. But I would expect them to be able to plug any version of the driving software into the robotaxi software (or into the customer FSD command-and-control structure). I wouldn't expect there to be completely different versions of the driving software.

If they are releasing new driving software versions to customer FSD every couple of weeks, and sometimes more frequently than that, I don't see the robotaxi software being noticeably more than 4 weeks ahead of customer FSD, because that would mean they were updating customer FSD to versions that were already out of date and could be skipped.

If the robotaxi software is only ~4 weeks or less ahead of customer FSD, and monitorless driving started on 22 Jan 2026, then it would appear that the software wasn't ready for real-world testing on 31 Aug 2025.

So to me it looks like the driving software wasn't ready by August 2025 and wasn't launched until 22 Jan 2026. (This assumes they have reached that level now, which might yet be disproved, though I don't particularly expect that; I hope it goes well.)

@ChristopherRandles "I don't see the robotaxi software being noticeably more than 4 weeks ahead of customer FSD"

You have no factual base for this assertion.

Excellent debate; thanks again for this, both of you. My thinking is that whenever we hit cases like this where we have to decide how much benefit of the doubt to give to Tesla (and agreed that this is often a crux of these disagreements) then the answer is to wait and hope the truth comes to light and makes the debate moot.