Training GPT-4 is estimated to have consumed around 50-60 million kWh of energy in total.

1/10th of this energy = 5-6 million kWh

1/100th of this energy = 0.5-0.6 million kWh

See calculations below:

@mods Resolves as YES. The creator's account is deleted, but DeepSeek v3 is much better than the original GPT-4 and was trained with an energy consumption of less than 5 million kWh.

Detailed calculation:

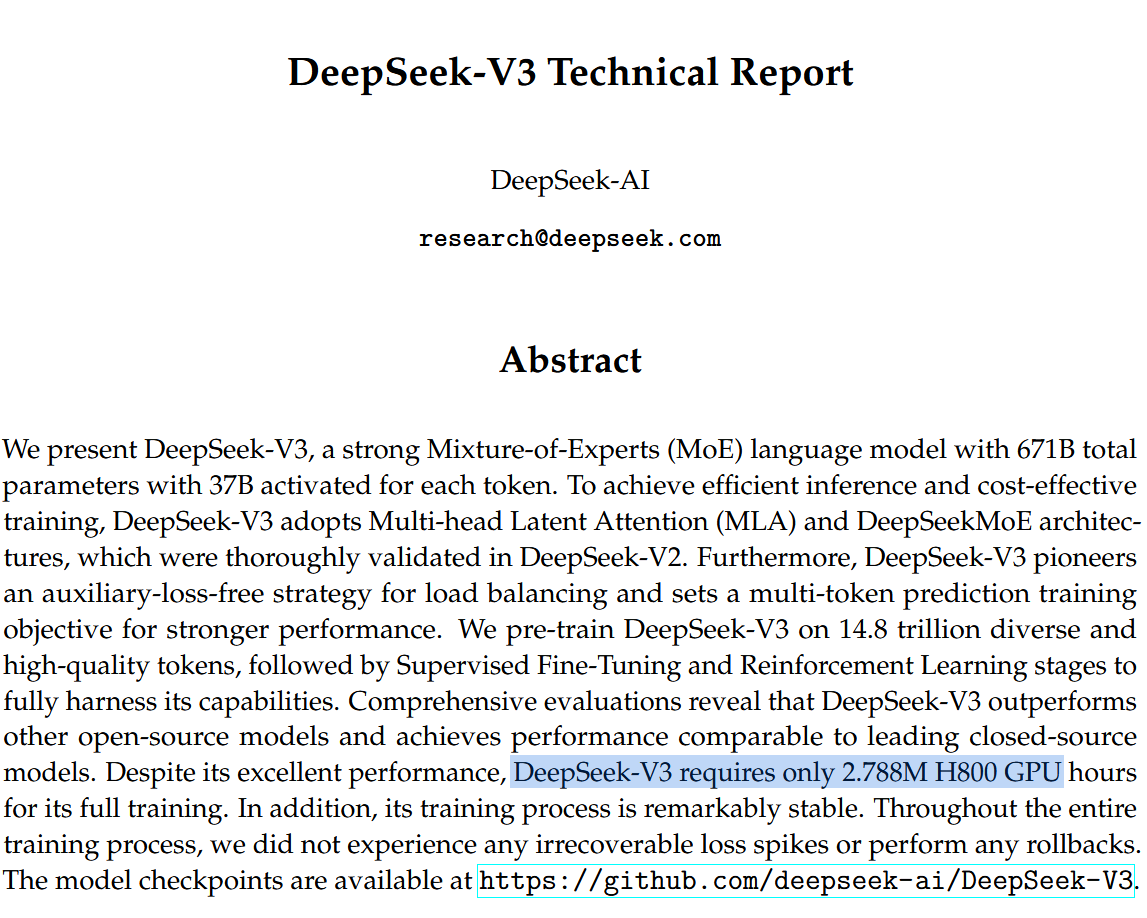

From the paper https://github.com/deepseek-ai/DeepSeek-V3/blob/main/DeepSeek_V3.pdf, we find that DeepSeek V3 required only 2.788 million H800 GPU hours for its full training.

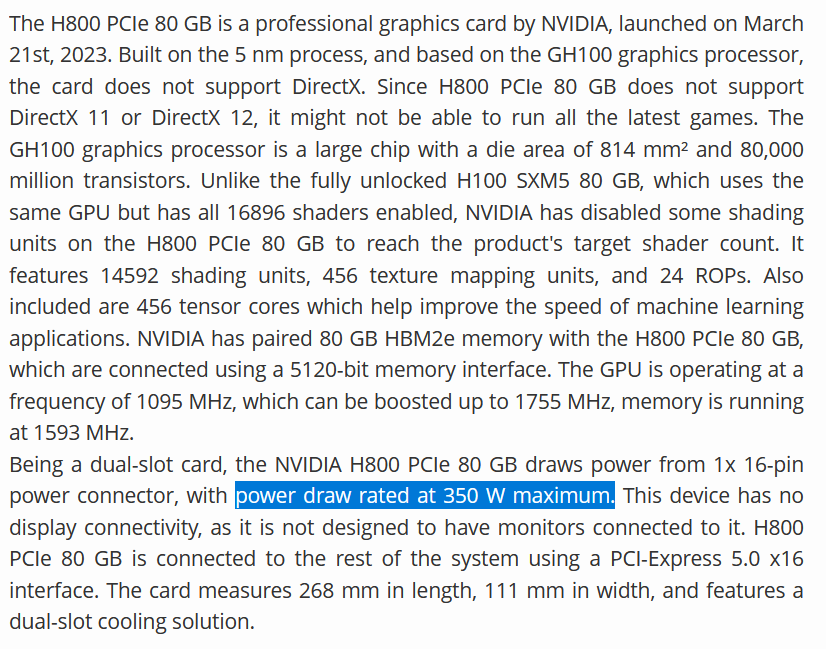

The H800 GPU has a maximum power draw of 0.35 kW, see https://www.techpowerup.com/gpu-specs/h800-pcie-80-gb.c4181

Thus, the GPUs used at most 0.9758 million kWh (= 0.35 kW × 2.788 million hours) during training. Applying a factor of 2 to account for system power draw and other inefficiencies gives an upper bound of about 1.95 million kWh, i.e. roughly 2 million kWh, for the total energy consumed in training the model. This is clearly below the 5 million kWh threshold required for resolving this market as YES.
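For anyone who wants to re-check the arithmetic, here is a minimal sketch in Python. It uses only the figures quoted in this thread; the variable and function names are illustrative, and the overhead factor of 2 is the assumption made above, not a measured value.

```python
# Back-of-the-envelope check of the training-energy estimate.
# All inputs are the figures quoted in this thread; names are illustrative.

GPU_HOURS = 2.788e6             # H800 GPU hours reported in the DeepSeek-V3 paper
GPU_POWER_KW = 0.35             # max power draw per GPU (TechPowerUp listing)
OVERHEAD_FACTOR = 2.0           # assumed allowance for system power and inefficiencies
GPT4_ENERGY_KWH = (50e6, 60e6)  # market's estimated range for GPT-4 training


def training_energy_kwh(gpu_hours, gpu_power_kw, overhead=1.0):
    """Energy in kWh = GPU hours x power per GPU (kW) x overhead factor."""
    return gpu_hours * gpu_power_kw * overhead


gpu_only = training_energy_kwh(GPU_HOURS, GPU_POWER_KW)
upper_bound = training_energy_kwh(GPU_HOURS, GPU_POWER_KW, OVERHEAD_FACTOR)

# Use the low end of the GPT-4 range, which gives the strictest 1/10 threshold.
threshold = GPT4_ENERGY_KWH[0] / 10

print(f"GPU-only energy: {gpu_only / 1e6:.4f} million kWh")
print(f"Upper bound:     {upper_bound / 1e6:.4f} million kWh")
print(f"Share of GPT-4 (low estimate): {upper_bound / GPT4_ENERGY_KWH[0]:.1%}")
print(f"Below 1/10 threshold: {upper_bound < threshold}")
```

With these inputs the upper bound comes out at about 1.95 million kWh, roughly 3.9% of the low-end GPT-4 estimate. Changing `GPU_POWER_KW` or `OVERHEAD_FACTOR` shows how much headroom remains before the 5 million kWh threshold is reached.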

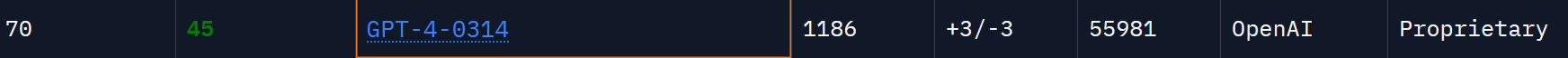

DeepSeek V3 is not merely as good as the original GPT-4 but considerably better; see https://lmarena.ai/

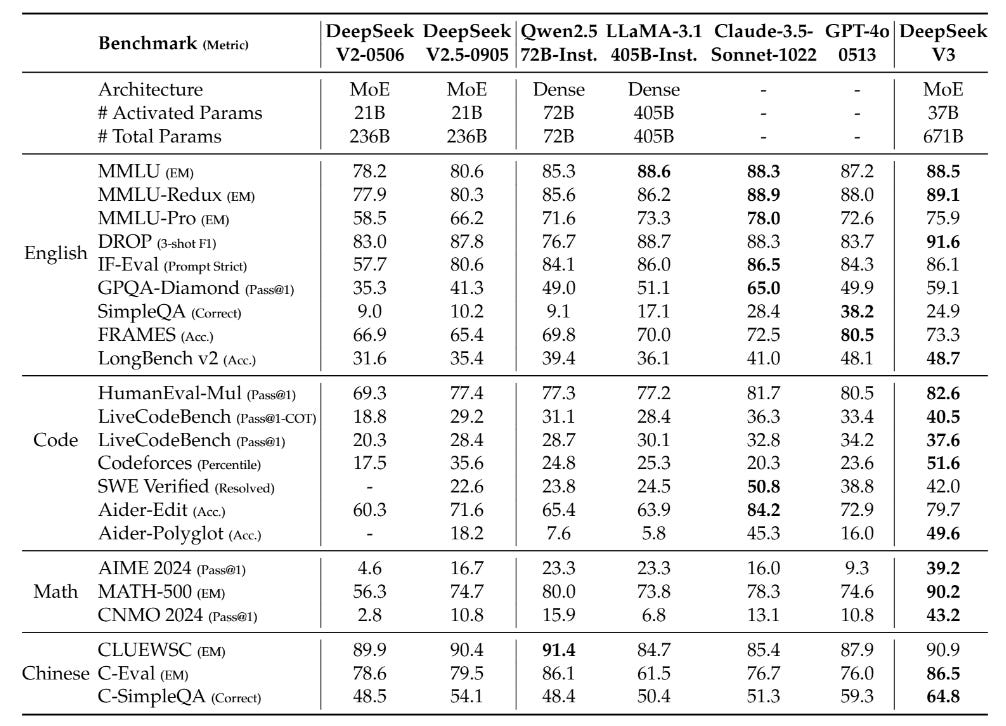

@mods It has been a few days, and there have been no objections to the resolution proposal above. The calculation is not rocket science. Due to sanctions, Chinese labs face significant compute limitations, so they designed an extremely efficient model that can be trained using less than 4% of the compute required for the original GPT-4. This model easily meets the criteria for a YES resolution. It even outperforms GPT-4o, the successor to GPT-4 and OpenAI's current strongest low-latency model.

Source: https://arxiv.org/pdf/2412.19437, page 31