Will an LLM with less than 10B parameters beat GPT4 by EOY 2025?


Ṁ260 · Ṁ1.8k · resolved Jan 10

Resolved YES

How much juice is left in 10B parameters?

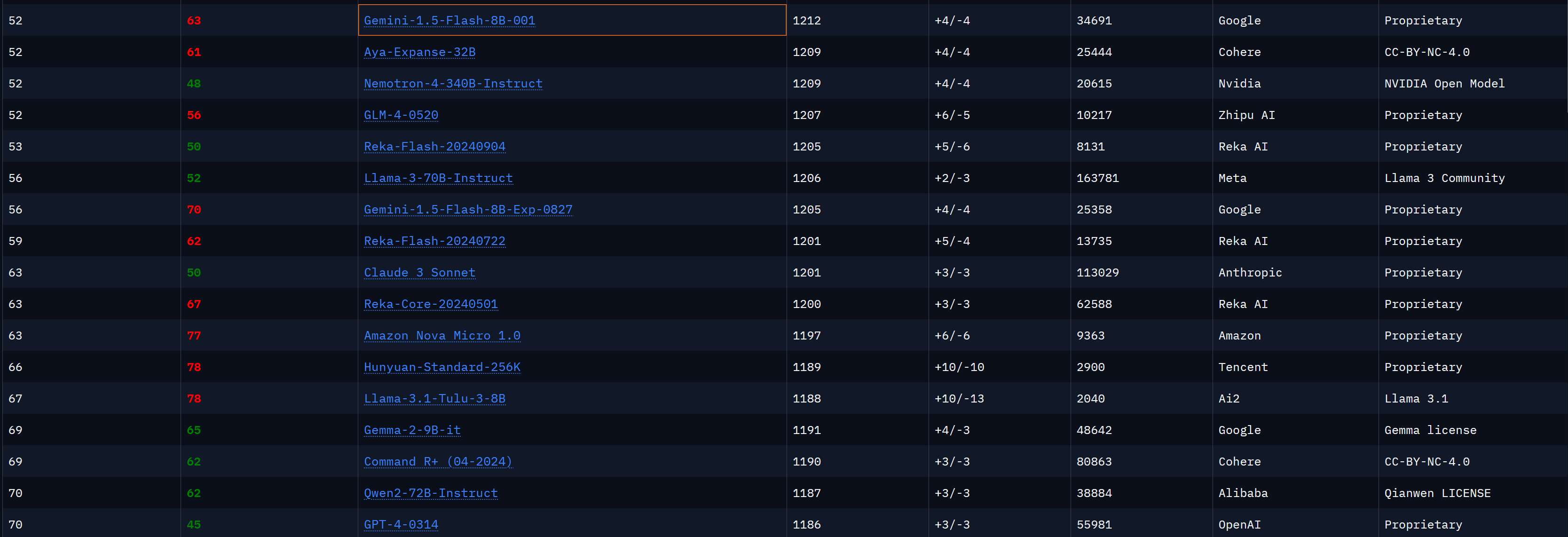

the original GPT4-0314 (ELO 1188)

judged by Lmsys Arena Leaderboard

current SoTA: Llama 3 8B instruct (1147)

This question is managed and resolved by Manifold.

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ47 |
| 2 | | Ṁ44 |
| 3 | | Ṁ32 |
| 4 | | Ṁ26 |
| 5 | | Ṁ23 |

bought Ṁ500 YES

@mods Resolves as YES. The creator's account is deleted, but Gemini 1.5 Flash-8B easily clears the bar (ELO above 1188) with an ELO of 1212; see https://lmarena.ai/

@singer There is a no-refusal board on lmsys. The gap between the main board and that one shows how much of an advantage not having censorship gives you.