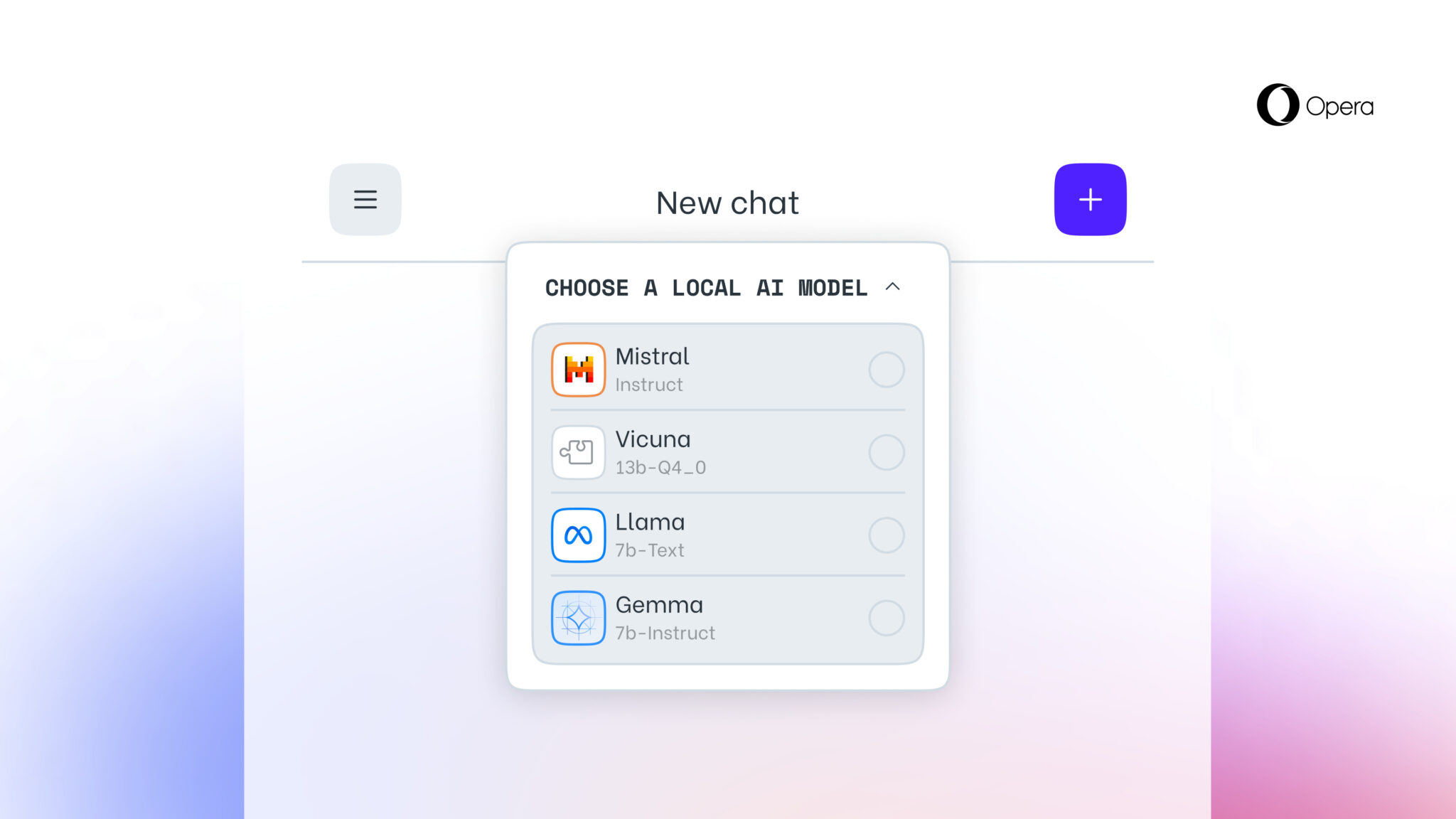

Opera issued a press release titled "Opera becomes the first major browser with built-in access to local AI models."

Will any other major browsers follow suit*?

* Access in beta or dev mode would suffice to resolve this market as yes

Update 2024-15-12 (PST): Canary (experimental) versions of browsers do not count - only beta or dev versions will qualify for resolution. (AI summary of creator comment)

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ552 |
| 2 | | Ṁ401 |
| 3 | | Ṁ361 |
| 4 | | Ṁ331 |
| 5 | | Ṁ331 |


Seems to require a locally running Ollama server: https://brave.com/blog/byom-nightly/

Not sure if that fits the definition of "native support for [only] local LLMs", as it's a generic mechanism that lets you use either local or remote models via an HTTP API.
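To illustrate why a generic HTTP mechanism does not distinguish local from remote models, here is a minimal sketch of a request to a locally running Ollama server. It assumes Ollama's default port (11434) and its `/api/generate` endpoint; the model name `llama3` and the helper names are hypothetical, and this is not a description of how Brave itself wires things up.

```typescript
// Sketch only: builds a request for an Ollama server.
// Assumptions: default port 11434, the /api/generate endpoint, and a
// hypothetical model name "llama3" that has already been pulled locally.

interface GenerateRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

function buildGenerateRequest(
  prompt: string,
  model = "llama3",
  baseUrl = "http://localhost:11434",
): GenerateRequest {
  return {
    url: `${baseUrl}/api/generate`,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      // stream: false asks for a single JSON object instead of a stream.
      body: JSON.stringify({ model, prompt, stream: false }),
    },
  };
}

// Sends the request; this only succeeds if a server is actually listening.
async function generate(prompt: string): Promise<string> {
  const { url, init } = buildGenerateRequest(prompt);
  const res = await fetch(url, init);
  if (!res.ok) throw new Error(`Server returned HTTP ${res.status}`);
  const data = (await res.json()) as { response: string };
  return data.response;
}
```

Passing a different `baseUrl` points the same code at a remote host, which is exactly the ambiguity raised above: nothing in the mechanism is specific to local models.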

@Soli apologies for the incorrect comment. The built-in support for Gemini Nano is actually in regular Chrome right now. It was added in version 131. Here is the link: https://developer.chrome.com/origintrials/#/view_trial/1923599990840623105

@jonathan21m looks promising but i still need to validate when i have more time tomorrow.

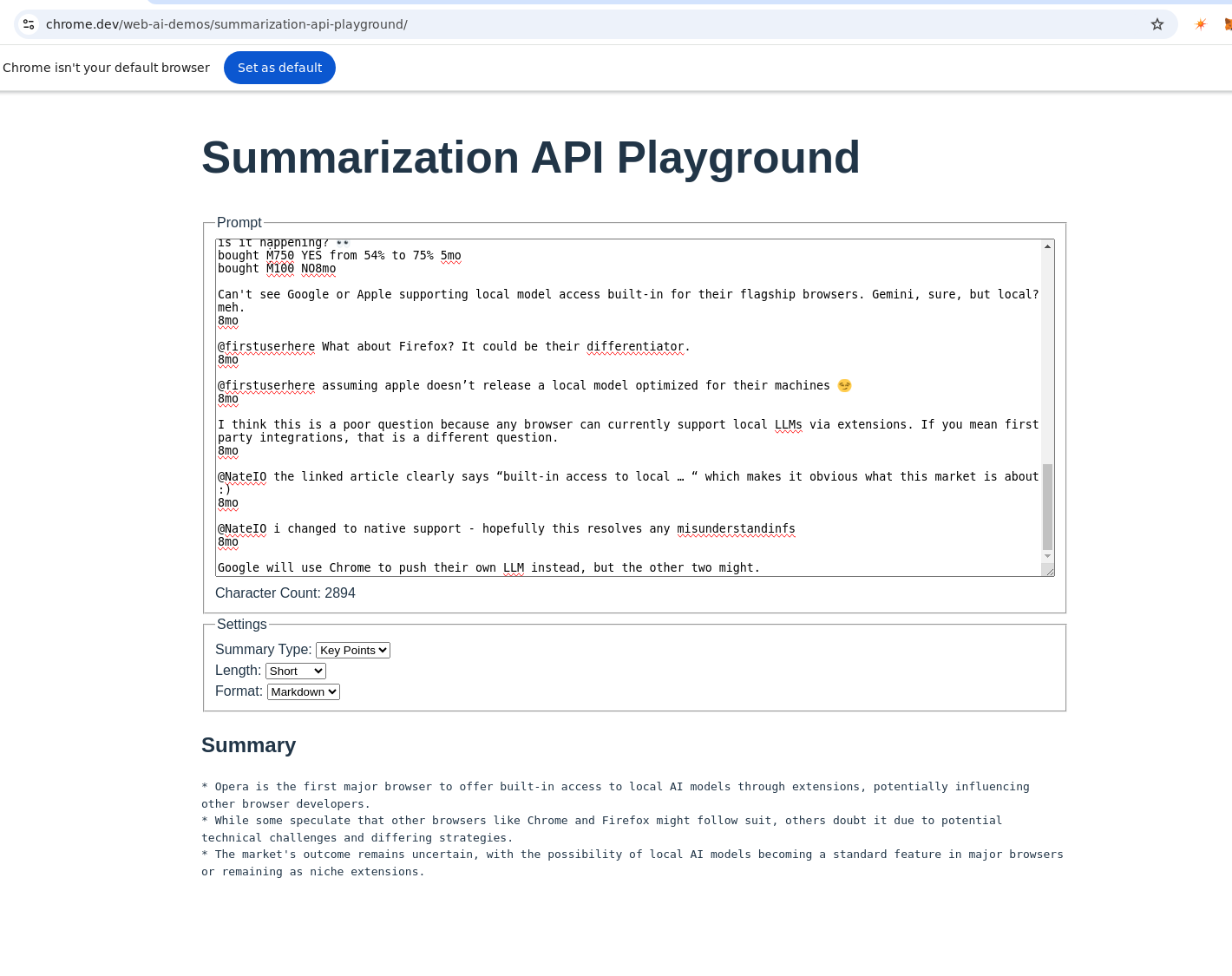

The Summarizer API uses a powerful AI model trained to generate high-quality summaries. While the API is built into Chrome, the model is downloaded separately the first time a website uses the API. [source]

@Soli Tested on Chrome stable 131 by visiting https://chrome.dev/web-ai-demos/summarization-api-playground/. It works, and according to the console logs it used local TensorFlow Lite with XNNPACK.
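For anyone who wants to check this from the console rather than the playground, a rough feature-detection sketch follows. The two entry points it looks for (a global `Summarizer` and an older `ai.summarizer` namespace) are assumptions about how the API has been exposed in different Chrome versions; the function name and return labels are made up for illustration.

```typescript
// Sketch only: reports which Summarizer API entry point, if any, is exposed.
// Both shapes checked here are assumptions and may not match your Chrome build.

type ApiShape = "global-Summarizer" | "ai.summarizer" | "none";

function detectSummarizer(scope: Record<string, unknown>): ApiShape {
  // Newer shape (assumed): a global `Summarizer` class.
  if (typeof scope["Summarizer"] !== "undefined") return "global-Summarizer";

  // Older origin-trial shape (assumed): `ai.summarizer` namespace.
  const ai = scope["ai"] as { summarizer?: unknown } | null | undefined;
  if (ai && typeof ai.summarizer !== "undefined") return "ai.summarizer";

  return "none";
}

// In a browser console you could call:
//   detectSummarizer(globalThis as unknown as Record<string, unknown>)
```

A result of `"none"` on a page does not by itself mean the browser lacks the feature, since an origin trial only exposes the API on origins that have opted in.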

@jonathan21m Implementation status: In developer trial (Behind a flag); this is three steps behind "Prepare to Ship"

@marvingardens but it's also in origin trial status, where you opt in to have it work on your domain (origin) in stable, and it is demonstrably usable on stable.

@lxgr It is in the trial, getting added to the trial is a short form and was immediately/automatically approved.
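For context on what opting in involves on the site's side: once registered, an origin gets a token and serves it either in a `<meta http-equiv="origin-trial">` tag or in an `Origin-Trial` HTTP response header. A small sketch of both forms is below; the helper names and the placeholder token are hypothetical.

```typescript
// Sketch only: the two usual ways a site presents an origin trial token.
// PLACEHOLDER_TOKEN is not a real token; real ones are issued per origin.

const PLACEHOLDER_TOKEN = "TOKEN_GOES_HERE";

// Form 1: a meta tag placed in the page's <head>.
function originTrialMetaTag(token: string): string {
  return `<meta http-equiv="origin-trial" content="${token}">`;
}

// Form 2: an HTTP response header sent with the page.
function originTrialHeader(token: string): Record<string, string> {
  return { "Origin-Trial": token };
}

console.log(originTrialMetaTag(PLACEHOLDER_TOKEN));
console.log(originTrialHeader(PLACEHOLDER_TOKEN));
```

Visitors do nothing in either case; the browser reads the token and enables the feature for that origin.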

@Lun Cool, thank you!

I'd argue this should still count, given that the market explicitly accepts beta/dev versions, and this works even with the production/live release as long as the origin has opted in to the API (which is not something I, as a visitor, have to do anything for).

@Soli I don't know about Chrome (as I don't use it) but Firefox has a similar feature. No objections to resolving YES (I sold my NO).

@Soli Oddly enough, people keep buying YES. Firefox is pretty slow with such features, and so is Chrome! Maybe Safari will do it, but Apple is generally behind the curve, so that seems even more unlikely.

The reason Vivaldi, Edge, and Opera do well with feature geeks is that they add new stuff faster.

Firefox doesn't even have tab groups, for context! Chrome has nowhere near the integrations of Edge.